Enhancing Web Application Performance with Caching

Memory is a constant bottleneck for large, busy applications. Effective caching strategies can both lower the memory footprint and speed up the application. Caching is a well-known optimization technique because it keeps items that have been recently used in memory, anticipating that they will be needed again.

Introduction

Memory is a constant bottleneck for large, busy applications. It is also the area in web development where the most abuse occurs and where the most benefit may be gained. In some cases, effective caching strategies can both lower the memory footprint and speed up the application. Caching is a well-known optimization technique because it keeps items that have been recently used in memory, anticipating that they will be needed again. Caching can be implemented in numerous ways, including the judicious use of design patterns.

Caching with the Flyweight Design Pattern

The Flyweight pattern appears in the Gang of Four book, which is the seminal work on patterns in software development. It uses sharing to support a large number of fine-grained object references. Flyweight is a strategy in which you keep a pool of objects available and create references into the pool of objects for particular views. It uses the idea of canonical objects. A canonical object is a single representative object that represents all other objects of that type. For example, if you have a particular product, it represents all products of that type. In an application, instead of creating a list of products for each user, you create one list of canonical products and each user has a list of references into that list.

The default eMotherEarth application in Art of Java Web Development is designed to hold a list of products for each user. However, that is a waste of memory. The products are the same for all users, and the characteristics of the products change very infrequently. Figure 1 shows the current architectural relationship between users and the list of products in the catalog.

Figure 1 In the eMotherEarth application, each user has their own list of products when they view the catalog. However, even though they have different views of them, they are still all looking at the same list of products.

The memory required to keep a unique list for each user is wasted. Even though each user has their own view of the products, there is only one list of products. Each user can change the sort order and what page's worth of products they see in the catalog page, but the fundamental characteristics of the product remain the same for each user. A better design would be to create a canonical list of products and hold references into that list for each user. This user/product relationship appears in figure 2.

Figure 2 A single list of product objects saves memory, and each user can keep a reference into that list for the particular products they are viewing at any given time.

In this scenario, each user still has a reference to a particular set of products (to maintain paging and sorting), but the references point back to the canonical list of products. This main list holds the only actual product objects present in the application. It is stored in a central location, accessible by all the users of the application.
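The relationship in figure 2 can be sketched in a few lines of Java. This is a minimal illustration, not the eMotherEarth code: the class names `Product`, `CanonicalCatalog`, and `UserCatalogView`, and the product fields, are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Immutable product; one instance per catalog item is shared by every user. */
final class Product {
    private final int id;
    private final String name;
    private final double price;

    Product(int id, String name, double price) {
        this.id = id;
        this.name = name;
        this.price = price;
    }

    int getId() { return id; }
    String getName() { return name; }
    double getPrice() { return price; }
}

/** Holds the single canonical list that all user views point into. */
final class CanonicalCatalog {
    private final List<Product> products;

    CanonicalCatalog(List<Product> products) {
        // Copy once, then expose only a read-only view of the canonical list.
        this.products = Collections.unmodifiableList(new ArrayList<>(products));
    }

    List<Product> getProducts() { return products; }
}

/** A user's view: a private list of references, not a private set of products. */
final class UserCatalogView {
    private final List<Product> page;

    UserCatalogView(CanonicalCatalog catalog, int start, int count) {
        List<Product> all = catalog.getProducts();
        int end = Math.min(start + count, all.size());
        // The new list is per-user, but its elements are the shared Product objects.
        this.page = new ArrayList<>(all.subList(start, end));
    }

    List<Product> getPage() { return page; }
}
```

Two users whose pages overlap end up holding references to the very same `Product` instances, so the per-user cost is a handful of references rather than a full copy of each product.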

Implementing Flyweight

The eMotherEarth application is featured in Art of Java Web Development. It is a modular Model 2 web application, making it easy to change to use the Flyweight design pattern. The only code shown here revolves around changing the boundary and controller classes to implement caching. To download the entire application, you can go to www.nealford.com/art.htm to access the book's source code archive. The first step is to build the canonical list of products and place it in a globally accessible place. The obvious choice is the application context. Therefore, the Welcome controller in eMotherEarth has changed to build the list of products and place it in the application context. The revised init() and new buildFlyweightReferences() methods of the Welcome controller appear in listing 1.

Listing 1 The Welcome controller builds the list of flyweight references and stores it in the application context.

public void init() throws ServletException {
    // Read the connection settings from the web application's init parameters.
    String driverClass =
        getServletContext().getInitParameter("driverClass");
    String password =
        getServletContext().getInitParameter("password");
    String dbUrl =
        getServletContext().getInitParameter("dbUrl");
    String user =
        getServletContext().getInitParameter("user");
    DBPool dbPool =
        createConnectionPool(driverClass, password, dbUrl, user);
    getServletContext().setAttribute("dbPool", dbPool);
    buildFlyweightReferences(dbPool);
}

private void buildFlyweightReferences(DBPool dbPool) {
    // The "products" attribute holds the canonical List, so test for the
    // List itself; casting the attribute to ProductDb would fail once set.
    List productList =
        (List) getServletContext().getAttribute("products");
    if (productList == null) {
        ProductDb productDb = new ProductDb();
        productDb.setDbPool(dbPool);
        productList = productDb.getProductList();
        // Keep the canonical list in id order.
        Collections.sort(productList, new IdComparator());
        getServletContext().setAttribute("products", productList);
    }
}

The buildFlyweightReferences() method first checks to make sure that the list hasn't already been built by another user's invocation of the Welcome servlet. This is probably more cautious than necessary because the init() method is only called once for the servlet, as it is loaded into memory. However, if we moved this code into doGet() or doPost(), it would be called multiple times. This is an easy enough test to perform and it doesn't hurt anything in the current implementation. If the canonical list doesn't exist yet, it is built, populated, and placed in the global context. Now, when an individual user needs to view products from the catalog, they are pulling from the global list. The Catalog controller has changed to pull the products for display from the global cache instead of creating a new list. The doPost() method of the Catalog controller appears in listing 2.
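If the build step ever did move into doGet() or doPost(), several requests could run the "check, then build" sequence at the same time. The sketch below shows one way to guarantee a single build under that assumption. It does not use the servlet API: `ApplicationScope` is a hypothetical stand-in for the application context, and `loadProducts()` is a placeholder for the real ProductDb query.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical stand-in for the application context; NOT the servlet API. */
final class ApplicationScope {
    private final ConcurrentMap<String, Object> attributes = new ConcurrentHashMap<>();
    private final AtomicInteger loads = new AtomicInteger();

    /** Returns the cached product names, loading them at most once. */
    @SuppressWarnings("unchecked")
    List<String> getProducts() {
        // computeIfAbsent applies the loader atomically, so concurrent callers
        // never build the canonical list twice.
        return (List<String>) attributes.computeIfAbsent("products", key -> loadProducts());
    }

    /** Placeholder loader; the article's version reads from ProductDb instead. */
    private List<String> loadProducts() {
        loads.incrementAndGet();
        List<String> products = new ArrayList<>();
        products.add("Leaves");
        products.add("Ocean");
        return products;
    }

    /** Number of times the loader actually ran (for demonstration only). */
    int getLoadCount() { return loads.get(); }
}
```

With init() doing the work, as in listing 1, none of this is needed; the sketch only matters if the cache is populated lazily from request-handling code.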

Listing 2 The Catalog controller pulls products from the global cache rather than building a new list of products for each user.

public void doPost(HttpServletRequest request,
                   HttpServletResponse response) throws
        ServletException, IOException {
    HttpSession session = request.getSession(true);
    ensureThatUserIsInSession(request, session);
    List productReferences =
        (List) getServletContext().getAttribute("products");
    int start = getStartingPage(request);
    int recsPerPage = Integer.parseInt(getServletConfig().
        getInitParameter("recsPerPage"));
    int totalPagesToShow = calculateNumberOfPagesToShow(
        productReferences.size(), recsPerPage);
    String[] pageList =
        buildListOfPagesToShow(recsPerPage, totalPagesToShow);
    List outputList = getProductListSlice(productReferences,
        start, recsPerPage);
    sortPagesForDisplay(request, outputList);
    bundleInformationForView(request, start, pageList,
        outputList);
    forwardToView(request, response);
}

The previous version of the Catalog controller called a method to create and populate a ProductDb boundary class. However, this version is simplified because it can safely assume that the product records already exist in memory. Thus, the entire getProductBoundary() method is no longer present in this version of the application. This is a rare case of less code, faster performance, and less memory! However, one other minor change was required to accommodate the caching. Previously, the sortPagesForDisplay() method did nothing if no sorting criterion was present in the request parameter; it simply returned the records without sorting them. The controller is designed to return a slice of the canonical list in the getProductListSlice() method.

private List getProductListSlice(List productReferences,
                                 int start, int recsPerPage) {
    if (start + recsPerPage > productReferences.size()) {
        // Last page: stop at the end of the list.
        return productReferences.subList(start,
            productReferences.size());
    } else {
        return productReferences.subList(start,
            start + recsPerPage);
    }
}

Previously, the lack of a sort criterion didn't cause any problems because every user had their own copy of the "master" list. This method returned a subset of that user's list. However, now all users are sharing the same list. The subList() method from the collections API does not clone the items in the list; it returns a view backed by the original list, so its elements are references to the same objects. This is a desirable characteristic because if it cloned the list items as it returned them, caching the product list would be pointless. However, because there is now only one actual list, the members of the list are sorted in page-sized chunks as each user gets a reference to some of the records in the list and applies the sortPagesForDisplay() method.
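The view behavior of subList() is easy to confirm in isolation. The following stand-alone sketch uses a list of integers in place of products; `SubListViewDemo` and its method are names invented for this illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

final class SubListViewDemo {
    /** Sorts one "page" of the shared list in descending order, in place. */
    static List<Integer> sortFirstPageDescending(List<Integer> shared, int pageSize) {
        List<Integer> page = shared.subList(0, pageSize); // a view, not a copy
        Collections.sort(page, Collections.reverseOrder());
        return page;
    }

    public static void main(String[] args) {
        List<Integer> shared = new ArrayList<>(Arrays.asList(1, 2, 3, 4, 5, 6));
        sortFirstPageDescending(shared, 3);
        // The first page of the backing list was reordered; the rest is untouched.
        System.out.println(shared); // prints [3, 2, 1, 4, 5, 6]
    }
}
```

Sorting the page reorders the corresponding region of the shared list, which is exactly the page-sized-chunk effect described above.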

private void sortPagesForDisplay(HttpServletRequest request,
                                 List outputList) {
    String sortField = request.getParameter("sort");
    // Default to id order so every request re-sorts its page.
    Comparator c = new IdComparator();
    if (sortField != null) {
        if (sortField.equalsIgnoreCase("price"))
            c = new PriceComparator();
        else if (sortField.equalsIgnoreCase("name"))
            c = new NameComparator();
    }
    Collections.sort(outputList, c);
}

The previous version of sortPagesForDisplay() only called the sort method if the user had specified a comparator in the request parameter (which is generated when the user clicks on one of the column headers in the view). However, if that implementation remained, then a new user logging into the application would get the same sorted list as the last user to sort the page-sized chunks of records. This is because a new user hasn't specified a sort criterion (in other words, they haven't had a chance yet to click on a column header and generate the sorting flag). The side effect of this caching technique is that every user is sorting the list of products in page-sized chunks. Even though that is changing the position of records for a given page-sized chunk, each user applies their own sorting criterion to the list before they see records. This implementation could be improved to prevent this side effect but, with a small number of records, it doesn't hurt the performance.
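One possible way to remove the side effect, offered as a sketch rather than as the book's implementation, is to copy the page of references into a small per-request list before sorting. The helper name `PageSlicer.sortedPage` is hypothetical; it combines the slicing and sorting steps for brevity.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

final class PageSlicer {
    /**
     * Returns a sorted page without touching the shared list.
     * The copy holds references only, so no product objects are duplicated.
     */
    static <T> List<T> sortedPage(List<T> shared, int start, int recsPerPage,
                                  Comparator<? super T> order) {
        int end = Math.min(start + recsPerPage, shared.size());
        // Copy just this page's references into a list owned by the request.
        List<T> page = new ArrayList<>(shared.subList(start, end));
        Collections.sort(page, order);
        return page;
    }
}
```

The cost is one short-lived list of at most recsPerPage references per request. In exchange, the canonical list keeps its original order, and requests only ever read from it.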

One characteristic of this controller makes it easy to retrofit to use this design pattern. The user chooses their page of records before sorting them. If the sorting occurred before the user chose which subset of records they wanted, this controller would have to be changed. However, it is unlikely that the user would make such a request - they would have to guess on which page their sorted record ended up.

Flyweight Considerations

The effectiveness of the Flyweight pattern as a caching mechanism depends heavily on certain characteristics of the data you are caching. It is a good fit when the following hold:

- The application uses a large number of objects.

- Storage (memory) cost is high to replicate this large number for multiple users.

- Either the objects are immutable or their state can be made external.

- Relatively few shared objects may replace many groups of objects.

- The application doesn’t depend on object identity. While the user may think they are getting a unique object, they actually have a reference from the cache.

One of the key characteristics enabling this style of caching is the state information in the objects. In the example above, the product objects were immutable as far as the user is concerned. If the user were allowed to make changes to the object, then this caching scenario wouldn't work. It depends on the objects stored in the cache being read-only. It is possible to store mutable objects using the Flyweight design pattern, but some of their state information must reside externally to the object. This appears in figure 3.

Figure 3 The Flyweight design pattern supports mutable objects in the cache by adding additional externalizable information to the link between product and reference.

It is possible to store the mutable information needed by the reference in a small class that is associated with the link between the flyweight reference and the flyweight object. A good example of this type of external state information in eMotherEarth is the preferred quantity for particular items. This is information particular to the user, so it should not be stored in the cache. However, there is a discrete chunk of information for each product. This preference (and others) would be stored in an association class, tied to the relationship between the reference and the product. When you use this option, the information must take very little memory in comparison to the flyweight object itself. Otherwise, you don't save any resources by using the flyweight.
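An association class of this kind might look like the following sketch. All of the names here (`CatalogItem`, `ItemPreference`, `UserPreferences`) and the default quantity of 1 are assumptions for illustration; the eMotherEarth source does not necessarily contain them.

```java
import java.util.HashMap;
import java.util.Map;

/** Intrinsic, shared state: identical for every user and held in the cache. */
final class CatalogItem {
    private final int id;
    private final String name;

    CatalogItem(int id, String name) {
        this.id = id;
        this.name = name;
    }

    int getId() { return id; }
    String getName() { return name; }
}

/** Extrinsic, per-user state attached to the link between a user and a shared item. */
final class ItemPreference {
    private final CatalogItem item; // reference into the cache, never a copy
    private final int preferredQuantity;

    ItemPreference(CatalogItem item, int preferredQuantity) {
        this.item = item;
        this.preferredQuantity = preferredQuantity;
    }

    CatalogItem getItem() { return item; }
    int getPreferredQuantity() { return preferredQuantity; }
}

/** One user's preferences, keyed by product id. */
final class UserPreferences {
    private final Map<Integer, ItemPreference> byItemId = new HashMap<>();

    void setPreferredQuantity(CatalogItem item, int quantity) {
        byItemId.put(item.getId(), new ItemPreference(item, quantity));
    }

    /** Returns the user's preferred quantity, or an assumed default of 1. */
    int preferredQuantityFor(CatalogItem item) {
        ItemPreference preference = byItemId.get(item.getId());
        return preference == null ? 1 : preference.getPreferredQuantity();
    }
}
```

Each `ItemPreference` carries one reference and one int, which keeps the per-user overhead small relative to the shared product object.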

The Flyweight design pattern is not recommended when the objects in the cache change rapidly or unexpectedly. It would not be a suitable caching strategy for the eMotherEarth application if the products changed several times a day. However, with its inventory, that seems unlikely. This solution works best when you have an immutable set of objects shared between most or all of your users. The memory savings are dramatic and become more pronounced the more concurrent users you have. This brings up an interesting question: how do you know that the caching helps? With many of the newer Java virtual machines, caching may not be necessary because of the efficiency of the garbage collector and the memory manager. It is always a good idea to measure changes to make sure they are worthwhile.

Was It Worth It?

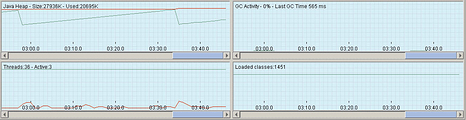

To see whether the changes actually improved the performance of the application, you need to measure both the old performance and the new. When dealing with caching, the two characteristics that should interest you are heap memory used (it should shrink) and the activity of the garbage collector (it should work less). You need to measure them under real conditions (or as close to real as possible), with multiple users. To test this application, I used a combination of JMeter and Borland's OptimizeIt Profiler.
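If a profiler is not at hand, the same two quantities can be sampled from inside the JVM through the standard java.lang.management API. This is a rough sketch and not a replacement for the profiler used in this article; the `HeapSnapshot` class is a name invented for the example.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

/** A point-in-time reading of heap usage and cumulative garbage collector activity. */
final class HeapSnapshot {
    final long usedBytes;
    final long committedBytes;
    final long gcCount;
    final long gcTimeMillis;

    private HeapSnapshot(long usedBytes, long committedBytes,
                         long gcCount, long gcTimeMillis) {
        this.usedBytes = usedBytes;
        this.committedBytes = committedBytes;
        this.gcCount = gcCount;
        this.gcTimeMillis = gcTimeMillis;
    }

    static HeapSnapshot take() {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        long count = 0;
        long time = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // Both values are -1 when a collector does not report them.
            count += Math.max(0, gc.getCollectionCount());
            time += Math.max(0, gc.getCollectionTime());
        }
        return new HeapSnapshot(heap.getUsed(), heap.getCommitted(), count, time);
    }

    @Override
    public String toString() {
        return "heap used=" + (usedBytes / 1024) + "K, committed="
            + (committedBytes / 1024) + "K, collections=" + gcCount
            + ", gc time=" + gcTimeMillis + "ms";
    }
}
```

Taking one snapshot at the same point in the load test for each version of the application, and comparing used heap and accumulated collection time, gives a coarse version of the comparison described in this section.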

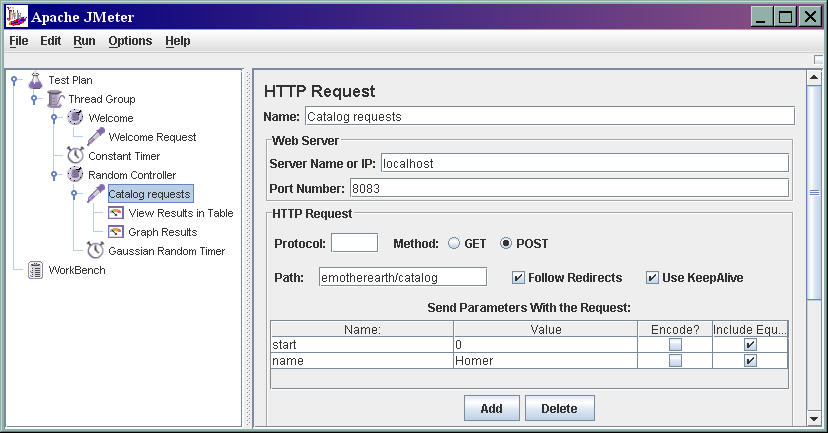

In JMeter, I set up a test plan using a Once Only Controller to access the Welcome page (thus firing the Welcome controller, which sets up the cache) and a Random Controller to randomly access the Catalog page. I set up 25 threads, each running for 100 iterations, with a random timer firing requests every 1/2 second. These seemed like reasonable numbers to test the behavior of the application. The JMeter setup appears in figure 4.

Figure 4 The JMeter setup fired requests from 25 users randomly for the Catalog page.

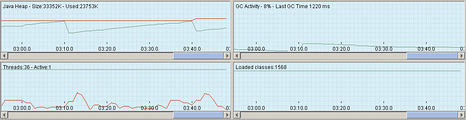

To measure the results of this load, I used OptimizeIt, which features a view that includes the heap size and garbage collector activity over time. First, I ran the unadorned application (i.e., without caching) to get baseline measurements of its performance. I took a snapshot of the state of the virtual machine at about the 3 minute mark, which allowed enough time for the application to reach a steady state. This baseline result appears in figure 5.

Figure 5 The baseline shows the heap size and garbage collector behavior at 3 minutes into the test.

This figure shows that the heap size is 33352K, with 23753K used. This was measured with no special setting for the maximum heap size of the virtual machine. In other words, this is how much the heap has grown on its own. Notice also that the garbage collector is running 8% of the time.

Figure 6 shows the same view of the state of the virtual machine at about the same time, using the caching version of the application.

Figure 6 The caching version shows improved memory usage and better garbage collection characteristics.

For the caching version of the application, the heap size and memory used are both smaller. Both snapshots show the characteristic Java saw-tooth pattern, as memory is allocated, then garbage collected. The garbage collector is hardly working at all in this snapshot. This is consistent with what you would expect with the Flyweight caching implementation. Objects are being reused rather than allowed to be garbage collected.

Summary

Adding caching to an application isn't a guaranteed way to improve performance. You should determine that an improvement is needed before you add the extra complexity to the application. To determine whether it is needed and if it is working, you should measure the actual performance of the application. It is possible to degrade your application by adding more complexity. Remember the quote from Donald Knuth: "We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil."

About the Author

Neal Ford is the chief technology officer at The DSW Group Ltd. in Atlanta, GA. He is an architect, designer, and developer of applications, instructional materials, magazine articles, and video/DVD presentations. Neal is also the author of Developing with Delphi: Object-Oriented Techniques (Prentice Hall PTR, 1996), JBuilder 3 Unleashed (SAMS Publishing, 1999), and Art of Java Web Development (Manning, 2003). His language proficiencies include Java, C#/.NET, Ruby, Object Pascal, C++, and C. Neal's primary consulting focus is the building of large-scale enterprise applications. He has taught on-site classes nationally and internationally to all phases of the military and many Fortune 500 companies. He is also an internationally acclaimed speaker, having spoken at numerous developer conferences worldwide.