Do AI capabilities enhance or impair human cognition?

I recently asked a high school math teacher the following question: If you asked your students to add up a column of numbers, would most be able to do so without the aid of a calculator? The answer was: Nope.

A few generations ago, this wasn’t the case. Students could indeed sum up a list of numbers without a calculator; I was one of those students.

And therein lies a potential but significant problem.

While calculators make it easier and faster to add numbers accurately, their constant use can diminish a human’s ability to perform the very calculations the device is designed to emulate. The machine helps us work faster, but it may not make us smarter. Over the long term, the device might actually make us dumber.

The same can be said of artificial intelligence in general.

The conundrum of calculators

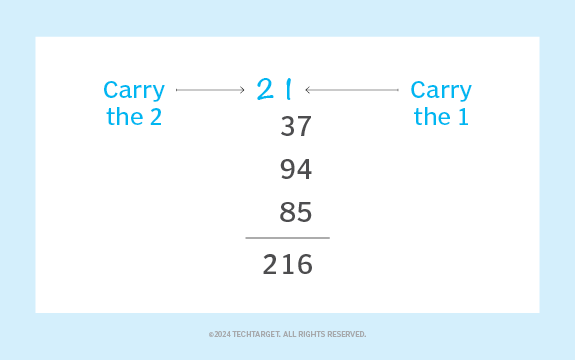

When children first learn to add up a list of numbers without using a calculator, one technique they’re taught is the carryover method. With this method, a child writes the numbers one above another and adds the digits one column at a time, starting with the right-most column. If a column’s total is greater than nine, only the last digit of that total (the ones digit) is written down; the remaining digits are carried over to the next column to the left.

The figure shows how this works. The first column of digits on the right side adds up to 16, so the child writes down the value 6 below the horizontal sum line and carries the value 1 over to the next column. That value is included when adding up the digits in the next column to the left, and if that sum again exceeds nine, the child writes down its last digit and carries the remaining digits over to the next column, as before. This process continues for as many columns as needed to complete the operation.
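For readers who think in code, the carryover method can be sketched in a few lines of Python. This is only an illustration of the technique described above: the function name `column_sum` and the sample numbers are hypothetical, chosen so that the right-most column adds up to 16 as in the description, and are not taken from the figure.

```python
def column_sum(numbers):
    """Add non-negative integers column by column, carrying as on paper.

    Returns the total plus a list of (column_total, digit_written, carry)
    tuples, one per column, starting with the right-most column.
    """
    if not numbers:
        return 0, []

    # Break each number into its digits, ones digit first.
    digit_rows = [[int(ch) for ch in reversed(str(n))] for n in numbers]
    width = max(len(row) for row in digit_rows)

    result_digits = []  # digits written below the sum line, ones digit first
    steps = []
    carry = 0
    for col in range(width):
        # Add the digits in this column plus whatever was carried over.
        column_total = carry + sum(row[col] for row in digit_rows if col < len(row))
        written = column_total % 10   # the last digit is written down
        carry = column_total // 10    # the rest is carried to the next column
        result_digits.append(written)
        steps.append((column_total, written, carry))

    # Any carry left after the last column becomes the leading digits.
    while carry > 0:
        result_digits.append(carry % 10)
        carry //= 10

    total = int("".join(str(d) for d in reversed(result_digits)))
    return total, steps


total, steps = column_sum([157, 286, 493])
print(total)  # 936
for column_total, written, carry in steps:
    print(f"column adds to {column_total}: write {written}, carry {carry}")
```

With these sample numbers, the first column adds to 16 (write 6, carry 1), the second to 23 (write 3, carry 2), and the third to 9 (write 9, carry nothing). The point of the sketch is that every step is visible, which is exactly what a calculator hides.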

Granted, it’s a tedious, error-prone process. However, after years of using the carryover method, a child develops an intuition about how number systems work. Later grades formally teach students about various number systems, such as how to express a value in both decimal (base-10) and hexadecimal (base-16) notation. Having such intuition at the start makes learning easier.

While using a calculator to do sums enables the child to work faster and more accurately, there is a cost. When a child learns to use a calculator rather than apply a technique such as the carryover method, they lose that skill of dissecting numbers according to their digits and thus don’t develop the intuition about the role of digits in a number system. The child merely learns how to press keys for numbers and symbols to get the answer.

Essentially, the machine does the calculation, and the child learns data entry but has no direct interaction with the logic that drives the calculation. The child may never know or need to know that logic, as long as the calculator gives accurate answers.

That is, until the day the calculator malfunctions and the answers are wrong. Without the ability to perform calculations unassisted, the child will never know that the device is in error. Imagine the calculator fails and reports that 2 + 2 = 5, but the child takes the error for a fact with no ability to determine otherwise.

The risk of AI without human cognition

Handwringing about children and calculators might not be a big deal. A calculator’s intelligence is limited, and we’ve used them for decades to no apparent ill effect. But what if we apply the same concerns to the surging development around AI and machine learning?

Today’s machine intelligence has expanded far beyond simple hand calculators and into large-scale AI efforts. In many fields, the use of AI is as commonplace as the use of hand calculators. And yet, the question remains the same: Does AI technology enhance or impair our ability to think?

Reliance on a hand calculator risks reducing a child to a data entry operator, so only the device has the cognitive ability to execute arithmetic operations. Broader use of AI might have the same potential effect: humans plug in data and AI is the sole agent capable of figuring out an answer.

To be clear, AI is a great tool with many unique benefits and use cases. However, it should be just that: a tool, wielded by a human to assist in a given task. Unbalancing that equation comes with real risks. What happens when the humans who possess the cognitive ability to perform the operations that AI does are no longer around? For example, I can sum up a list of numbers without using a calculator, but many (particularly in younger generations) seemingly cannot. What happens when I, and others like me, leave this world? Will the ability to do sums manually be lost forever?

The time may come when the workings of AI become so opaque that we can no longer think on our own. A worse scenario may be this: what happens when our trusted AI fails? We could become like the child with a faulty calculator who goes through life thinking that two plus two does indeed equal five. A world in which that occurs, and is accepted, is not only wrong, it’s dangerous.