
JVM tuning vs. Java optimization: What's the difference?

JVM tuning and Java optimization sound similar, and both aim to boost application performance. But they take fundamentally different approaches to accomplish their goals.

The terms JVM tuning and Java optimization are often used interchangeably, but there is an important difference between the two practices. In short, JVM tuning requires a tradeoff between metrics such as throughput and response time. Java optimization, when successfully implemented, improves the system overall.

Java virtual machine (JVM) tuning requires a stable system: memory, processor speed, network bandwidth and hosted applications should not change while you tune. The system must also have an established history of key performance indicators (KPIs), so users need documented metrics such as throughput, latency, response time and maximum transactions per second. JVM tuning, also called application tuning, then involves changing a single parameter, configuration setting or environment variable and quantifying the impact of that change on the system.
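The following sketch shows what the baseline-then-change process might look like. It runs a fixed workload and reports throughput along with garbage collection counts and times, using the standard `java.lang.management` API. The class name and the allocation-heavy workload are hypothetical placeholders. In practice, you would run the same harness before and after changing a single flag and compare the numbers.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

public class KpiHarness {

    /** Hypothetical workload: allocates short-lived objects to exercise the garbage collector. */
    static long runWorkload(int iterations) {
        long checksum = 0;
        for (int i = 0; i < iterations; i++) {
            List<String> batch = new ArrayList<>();
            for (int j = 0; j < 100; j++) {
                batch.add("item-" + j);
            }
            checksum += batch.size();
        }
        return checksum;
    }

    /** Sums collection counts across all collectors; a bean reports -1 if the count is undefined. */
    static long totalGcCount() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            total += Math.max(0, gc.getCollectionCount());
        }
        return total;
    }

    /** Sums accumulated collection time in milliseconds across all collectors. */
    static long totalGcTimeMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            total += Math.max(0, gc.getCollectionTime());
        }
        return total;
    }

    public static void main(String[] args) {
        int iterations = 100_000;
        long gcCountBefore = totalGcCount();
        long gcTimeBefore = totalGcTimeMillis();
        long start = System.nanoTime();

        long checksum = runWorkload(iterations);

        double seconds = (System.nanoTime() - start) / 1e9;
        System.out.printf("checksum=%d%n", checksum);
        System.out.printf("throughput=%.0f iterations/s%n", iterations / seconds);
        System.out.printf("gcCount=%d gcTimeMs=%d%n",
                totalGcCount() - gcCountBefore, totalGcTimeMillis() - gcTimeBefore);
    }
}
```

For example, you could run `java -Xmx256m KpiHarness` and then `java -Xmx1g KpiHarness`, changing only that one flag between runs. Single runs are noisy, so repeat each configuration several times, or use a dedicated harness such as JMH, before drawing conclusions.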

On a properly configured JVM, performance tuning typically involves a tradeoff between different metrics. For example, increasing the heap size reduces the number of garbage collection cycles. However, each collection then has more memory to process, which can lengthen stop-the-world pauses. Similarly, increasing the number of threads an application uses will likely increase lock contention and can raise the risk of deadlocks.
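You can observe the thread-count tradeoff with a small experiment. In the sketch below, several threads increment one counter guarded by a single lock. Because every increment competes for the same monitor, adding threads does not make the fixed amount of work finish proportionally faster, and it may make it slower. The class and method names are illustrative, and actual timings depend heavily on hardware and JVM version.

```java
import java.util.ArrayList;
import java.util.List;

public class ContentionDemo {

    private long counter = 0;

    // Every increment must acquire the same monitor, so threads queue behind one another.
    private synchronized void increment() {
        counter++;
    }

    /** Starts the given number of threads, each performing incrementsPerThread increments. */
    static long run(int threads, int incrementsPerThread) {
        ContentionDemo demo = new ContentionDemo();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread worker = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    demo.increment();
                }
            });
            workers.add(worker);
            worker.start();
        }
        for (Thread worker : workers) {
            try {
                worker.join(); // join() also makes the workers' writes visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Interrupted while waiting for workers", e);
            }
        }
        return demo.counter;
    }

    public static void main(String[] args) {
        int totalIncrements = 4_000_000;
        // Same total work each time; only the number of competing threads changes.
        for (int threads : new int[] {1, 2, 4, 8}) {
            long start = System.nanoTime();
            long result = run(threads, totalIncrements / threads);
            long elapsedMs = (System.nanoTime() - start) / 1_000_000;
            System.out.printf("threads=%d result=%d elapsedMs=%d%n", threads, result, elapsedMs);
        }
    }
}
```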

In a fully optimized Java environment, JVM performance tuning inevitably means improving one metric at the cost of another. It's a zero-sum game, although some configurations will provide a better user experience and better resource utilization than others.

JVM performance tuning targets

Common targets of JVM performance tuning routines include:

- Maximum heap size

- Minimum heap size

- Metaspace size

- Garbage collection types

- Log configuration

- Synchronization policies

- Page size

- Survivor ratio

- String compression

As you tweak these JVM tuning options, you'll see effects on various KPIs, including startup speed, method latency, throughput, garbage collection pause times, application jitter and transactions per second. The goal of tuning is to strike the optimal balance between these KPIs.
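When you experiment with the targets listed above, it helps to confirm which values the JVM is actually using. On HotSpot-based JVMs such as OpenJDK, the `com.sun.management.HotSpotDiagnosticMXBean` interface can report the current value of a named VM option. The flag names below are HotSpot flags that correspond to several of the listed targets. They may not exist on other JVM implementations, so this sketch treats unknown names as absent rather than failing.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import com.sun.management.VMOption;
import java.lang.management.ManagementFactory;
import java.util.List;

public class ShowTuningFlags {

    // HotSpot flag names for heap size, metaspace, survivor ratio, string compression and GC type.
    static final List<String> FLAGS = List.of(
            "MaxHeapSize", "InitialHeapSize", "MaxMetaspaceSize",
            "SurvivorRatio", "CompactStrings", "UseG1GC");

    /** Returns the flag's current value and origin, or null if this JVM does not recognize it. */
    static String currentValue(String flag) {
        HotSpotDiagnosticMXBean hotspot =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        if (hotspot == null) {
            return null; // diagnostic bean not available on this JVM
        }
        try {
            VMOption option = hotspot.getVMOption(flag);
            return option.getValue() + " (origin: " + option.getOrigin() + ")";
        } catch (IllegalArgumentException unknownFlag) {
            return null; // getVMOption throws for names the JVM does not define
        }
    }

    public static void main(String[] args) {
        System.out.println("Command-line arguments: "
                + ManagementFactory.getRuntimeMXBean().getInputArguments());
        for (String flag : FLAGS) {
            System.out.printf("%-18s %s%n", flag, currentValue(flag));
        }
    }
}
```

Running it as `java -Xmx512m -XX:SurvivorRatio=6 ShowTuningFlags` should show those two values reported with a non-default origin. Alternatively, `java -XX:+PrintFlagsFinal -version` lists every flag's final value without writing any code.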

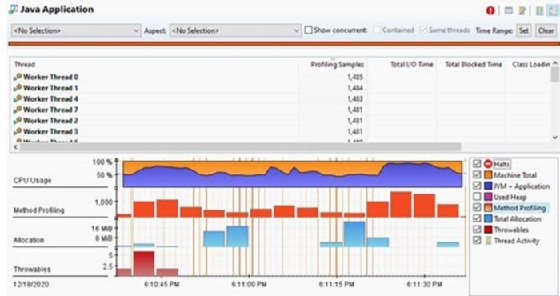

Java Mission Control can help diagnose performance problems and find ways to optimize the JVM.

JVM tuning vs. performance optimization

Performance optimization is different from JVM tuning.

Java optimization involves identifying underperforming or misbehaving code, frameworks, libraries, configurations and, potentially, even hardware. Once you identify a component as an optimization target, you rework it and retest the system. If the optimization target is successfully remediated, the system as a whole performs better, with no tradeoff between metrics. Unlike JVM tuning, Java performance optimization is not a zero-sum game.
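Here is a small illustration of reworking and retesting one component. The sketch replaces repeated `String` concatenation in a loop with a `StringBuilder`, checks that both versions produce identical output, and then times each one. The method names are hypothetical. One-off timings like these are only indicative; a benchmarking harness such as JMH gives more trustworthy numbers.

```java
public class ReworkAndRetest {

    /** Original component: creates a new String each iteration, copying all prior characters. */
    static String joinSlow(int count) {
        String result = "";
        for (int i = 0; i < count; i++) {
            result += i + ",";
        }
        return result;
    }

    /** Reworked component: appends into a single growable buffer. */
    static String joinFast(int count) {
        StringBuilder result = new StringBuilder();
        for (int i = 0; i < count; i++) {
            result.append(i).append(',');
        }
        return result.toString();
    }

    static long timeMillis(Runnable task) {
        long start = System.nanoTime();
        task.run();
        return (System.nanoTime() - start) / 1_000_000;
    }

    public static void main(String[] args) {
        int count = 20_000;
        // Retest correctness first: an optimization must not change behavior.
        if (!joinSlow(count).equals(joinFast(count))) {
            throw new AssertionError("Reworked component changed the output");
        }
        System.out.println("original ms: " + timeMillis(() -> joinSlow(count)));
        System.out.println("reworked ms: " + timeMillis(() -> joinFast(count)));
    }
}
```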

Java performance optimization steps

Optimization typically involves the following steps:

- Identify a potentially underperforming component.

- Gather runtime metrics with a JVM profiling tool such as Java Flight Recorder or VisualVM.

- Make changes to the identified component until its performance improves.

- Redeploy the application with the optimized Java component.
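You can also script the metrics-gathering step. JDK 11 and later include the `jdk.jfr` API, which can start a Java Flight Recorder recording from inside the application, dump it to a file and read the events back. The sketch below assumes a hypothetical `suspectComponent` method and uses JFR's built-in "default" configuration. You can also open the resulting .jfr file in Java Mission Control.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.text.ParseException;
import java.util.List;
import jdk.jfr.Configuration;
import jdk.jfr.Recording;
import jdk.jfr.consumer.RecordedEvent;
import jdk.jfr.consumer.RecordingFile;

public class ProfileComponent {

    /** Hypothetical component suspected of underperforming. */
    static long suspectComponent() {
        long totalDigits = 0;
        for (int i = 0; i < 2_000_000; i++) {
            totalDigits += Integer.toString(i).length();
        }
        return totalDigits;
    }

    /** Records the component with JFR's built-in "default" settings and returns the .jfr file. */
    static Path recordToTempFile() {
        try {
            Path output = Files.createTempDirectory("jfr").resolve("component.jfr");
            Configuration settings = Configuration.getConfiguration("default");
            try (Recording recording = new Recording(settings)) {
                recording.start();
                suspectComponent();
                recording.stop();
                recording.dump(output); // writes the stopped recording's data to disk
            }
            return output;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ParseException e) {
            throw new IllegalStateException("Could not parse JFR configuration", e);
        }
    }

    /** Reads every event from a recording file into memory. */
    static List<RecordedEvent> readEvents(Path file) {
        try {
            return RecordingFile.readAllEvents(file);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path file = recordToTempFile();
        List<RecordedEvent> events = readEvents(file);
        System.out.println("Recorded " + events.size() + " events in " + file);
        // List the distinct event types captured, such as execution samples or GC events.
        events.stream()
                .map(event -> event.getEventType().getName())
                .distinct()
                .sorted()
                .forEach(System.out::println);
    }
}
```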

Java optimization targets

There are a variety of ways to optimize Java performance. One common method is to remove application bottlenecks. Ways to do so include:

- Use the most appropriate collection classes.

- Eliminate poor programming practices such as unnecessary autoboxing of primitive types.

- Reduce the amount of data returned from Hibernate and JDBC database calls.

- Minimize synchronized methods, which can cause thread blocking and contribute to deadlocks.

- Eliminate development practices that cause Java memory leaks.
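Several of the items above can be sketched in plain Java. The example below contrasts three pairs of approaches:

- A boxed `Long` accumulator versus a primitive `long`.
- Repeated `List` membership checks versus a single `HashSet` lookup table.
- A lock-based counter versus `java.util.concurrent.atomic.LongAdder`.

The method names are hypothetical. Confirm with a profiler whether any of these changes matters in a particular application rather than assuming it does.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.concurrent.atomic.LongAdder;
import java.util.stream.IntStream;

public class BottleneckFixes {

    // Autoboxing: each += unboxes the Long, adds, then boxes the result into a Long again.
    static long sumBoxed(int n) {
        Long total = 0L;
        for (int i = 0; i < n; i++) {
            total += i;
        }
        return total;
    }

    // Primitive accumulator: the same arithmetic with no per-iteration boxing.
    static long sumPrimitive(int n) {
        long total = 0L;
        for (int i = 0; i < n; i++) {
            total += i;
        }
        return total;
    }

    // Collection choice: build a HashSet once so each lookup is a hash probe, not a linear scan.
    static int countMatches(List<Integer> ids, List<Integer> queries) {
        Set<Integer> lookup = new HashSet<>(ids);
        int matches = 0;
        for (Integer query : queries) {
            if (lookup.contains(query)) {
                matches++;
            }
        }
        return matches;
    }

    // Synchronization: LongAdder spreads contended updates across cells instead of one lock.
    static long countInParallel(int increments) {
        LongAdder counter = new LongAdder();
        IntStream.range(0, increments).parallel().forEach(i -> counter.increment());
        return counter.sum();
    }

    public static void main(String[] args) {
        System.out.println(sumBoxed(1_000));      // 499500
        System.out.println(sumPrimitive(1_000));  // 499500
        System.out.println(countMatches(List.of(1, 2, 3, 4, 5), List.of(2, 4, 6))); // 2
        System.out.println(countInParallel(100_000)); // 100000
    }
}
```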

JVM tuning and Java performance optimization are important activities in the application lifecycle. They maximize application performance and increase the throughput capacity of your local hardware or cloud computing resources. They should be a key part of an organization's DevOps strategy.